
Multi-Task ICL September 2023

Learning shared safety constraints from multi-task demos.

Regardless of the particular task we want an agent to perform in an environment, there are often shared safety constraints we want it to respect. Manually specifying such a constraint can be both time-consuming and error-prone. We show how to learn constraints from expert demonstrations of safe task completion by extending inverse reinforcement learning (IRL) techniques to the space of constraints. Intuitively, we learn constraints that forbid highly rewarding behavior that the expert could have taken but chose not to.
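To make that intuition concrete, here is a toy sketch under strong simplifying assumptions. It is not the project's implementation. Each candidate trajectory is summarized by a made-up reward and made-up feature counts over hypothetical regions `[lava, grass, road]`. A learner picks the trajectory that maximizes reward minus `lam` times a linear constraint cost. The constraint update then raises the cost of features the learner used but the expert avoided. All names and numbers (`TRAJECTORIES`, `best_response`, `learn_constraint`, `lam`, `lr`) are hypothetical.

```python
import numpy as np

# Hypothetical trajectories: (name, reward, feature counts over [lava, grass, road]).
TRAJECTORIES = [
    ("road", 5.0, np.array([0.0, 0.0, 2.0])),   # the expert's (safe) choice
    ("lava", 10.0, np.array([2.0, 0.0, 0.0])),  # high reward, unsafe
    ("grass", 7.0, np.array([0.0, 2.0, 0.0])),  # higher reward, expert did not use it
]
EXPERT = 0


def best_response(w, lam=10.0):
    """Learner: maximize reward minus lam * (linear constraint cost w . phi)."""
    scores = [r - lam * (w @ phi) for _, r, phi in TRAJECTORIES]
    return int(np.argmax(scores))


def learn_constraint(lr=0.5, iters=20):
    """Raise the cost of features the learner used but the expert avoided."""
    w = np.zeros(3)  # per-feature constraint weights, kept in [0, 1]
    phi_expert = TRAJECTORIES[EXPERT][2]
    for _ in range(iters):
        i = best_response(w)
        if i == EXPERT:
            break  # the constrained learner now matches the expert
        w = np.clip(w + lr * (TRAJECTORIES[i][2] - phi_expert), 0.0, 1.0)
    return w


print(learn_constraint())  # [1. 1. 0.]
```

The result forbids lava, as intended, but it also forbids grass, which the expert merely had no reason to use in this single task. That is the over-conservatism described in the next paragraph.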

Unfortunately, the constraint learning problem is rather ill-posed and typically leads to overly conservative constraints that forbid all behavior that the expert did not take. We counter this by leveraging diverse demonstrations that naturally occur in multi-task settings to learn a tighter set of constraints. We validate our method with simulation experiments on high-dimensional continuous control tasks.
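The effect of diverse demonstrations can be seen in a deliberately simplified tabular picture. This sketch assumes that the most conservative consistent constraint forbids every state that no demonstration visits. That rule is a hypothetical stand-in for the learned constraint, not the method used in the experiments. The states, the tasks, and the ground-truth unsafe set are all made up.

```python
N_STATES = 10
TRUE_UNSAFE = {4, 5}  # ground truth, hidden from the learner

# Hypothetical expert demos for three different tasks, as sets of visited states.
DEMOS = {
    "task_a": {0, 1, 2, 3},
    "task_b": {0, 6, 7},
    "task_c": {0, 8, 9},
}


def conservative_constraint(demos):
    """Forbid every state that no (safe) demonstration ever visited."""
    visited = set().union(*demos)
    return set(range(N_STATES)) - visited


single = conservative_constraint([DEMOS["task_a"]])
multi = conservative_constraint(DEMOS.values())
print(sorted(single))  # [4, 5, 6, 7, 8, 9]
print(sorted(multi))   # [4, 5]
```

Because the expert is assumed safe, every visited state is known to be allowed. Adding tasks can therefore only shrink the forbidden set, and the set still contains every truly unsafe state.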

Position Based Fluids December 2022

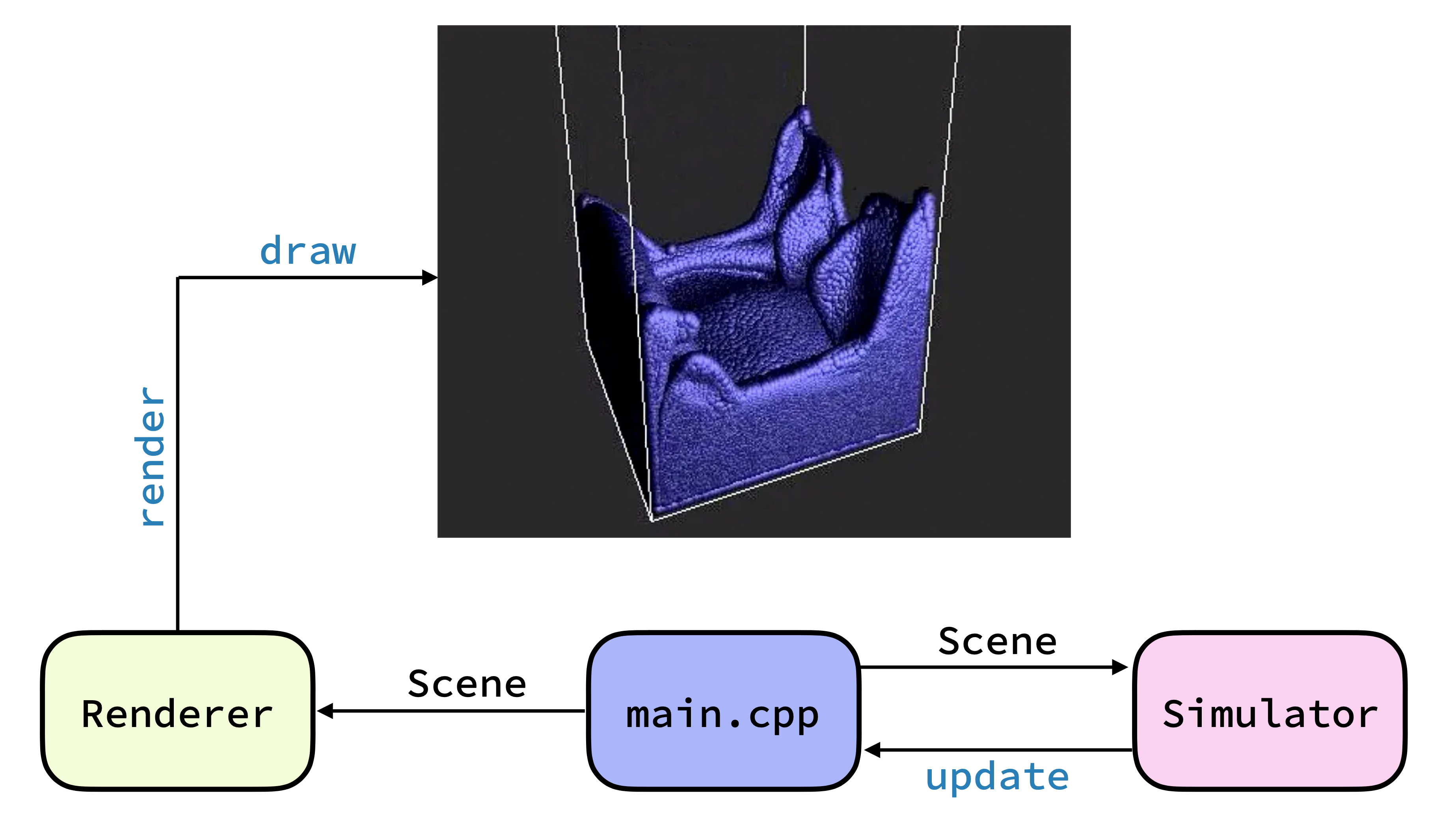

Parallel fluid simulation in CUDA.

This is a sequential and parallel position-based fluid simulator implemented in C++ and CUDA, with a custom OpenGL renderer. The parallel simulator supports data parallelism, efficient neighbor computation using parallel counting sort, and multi-threaded rendering. It achieves speedups of up to 30x over the sequential simulator and supports real-time simulation and rendering of up to 100,000 particles.
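Neighbor computation with counting sort works roughly as follows. This minimal NumPy sketch is not the project's CUDA code. It assumes a bounded domain and a uniform grid whose cell size equals the kernel radius `h`, so all neighbors lie in the 27 surrounding cells. The function names `build_grid` and `neighbors` are hypothetical. The three phases (histogram, exclusive prefix sum, scatter) are the ones that typically map onto parallel GPU primitives.

```python
import itertools

import numpy as np


def build_grid(pos, h, grid_dims):
    """Counting-sort particles into uniform cells of side h.

    Returns (sorted_ids, cell_start): the particles in flat cell c are
    sorted_ids[cell_start[c]:cell_start[c + 1]].
    """
    dims = np.asarray(grid_dims)
    cell = np.clip((pos // h).astype(int), 0, dims - 1)
    flat = np.ravel_multi_index(tuple(cell.T), tuple(dims))  # one cell id per particle
    # 1) Histogram of particles per cell (typically atomicAdd on the GPU).
    counts = np.bincount(flat, minlength=int(dims.prod()))
    # 2) Exclusive prefix sum gives each cell's start offset (a parallel scan on the GPU).
    cell_start = np.concatenate(([0], np.cumsum(counts)))
    # 3) Scatter each particle into its cell's slot range.
    offset = cell_start[:-1].copy()
    sorted_ids = np.empty(len(pos), dtype=int)
    for i, c in enumerate(flat):
        sorted_ids[offset[c]] = i
        offset[c] += 1
    return sorted_ids, cell_start


def neighbors(i, pos, h, grid_dims, sorted_ids, cell_start):
    """Particles within distance h of particle i, found by scanning 27 cells."""
    dims = np.asarray(grid_dims)
    ci = np.clip((pos[i] // h).astype(int), 0, dims - 1)
    result = []
    for off in itertools.product((-1, 0, 1), repeat=3):
        c = ci + off
        if np.any(c < 0) or np.any(c >= dims):
            continue  # neighboring cell lies outside the domain
        flat = np.ravel_multi_index(tuple(c), tuple(dims))
        for j in sorted_ids[cell_start[flat]:cell_start[flat + 1]]:
            if j != i and np.linalg.norm(pos[j] - pos[i]) < h:
                result.append(int(j))
    return result
```

Because particles in the same cell end up contiguous in `sorted_ids`, each neighbor query reads a few short, contiguous ranges instead of testing all particles.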

How to Adapt CLIP December 2021

Efficient fine-tuning of large-scale pretrained models.

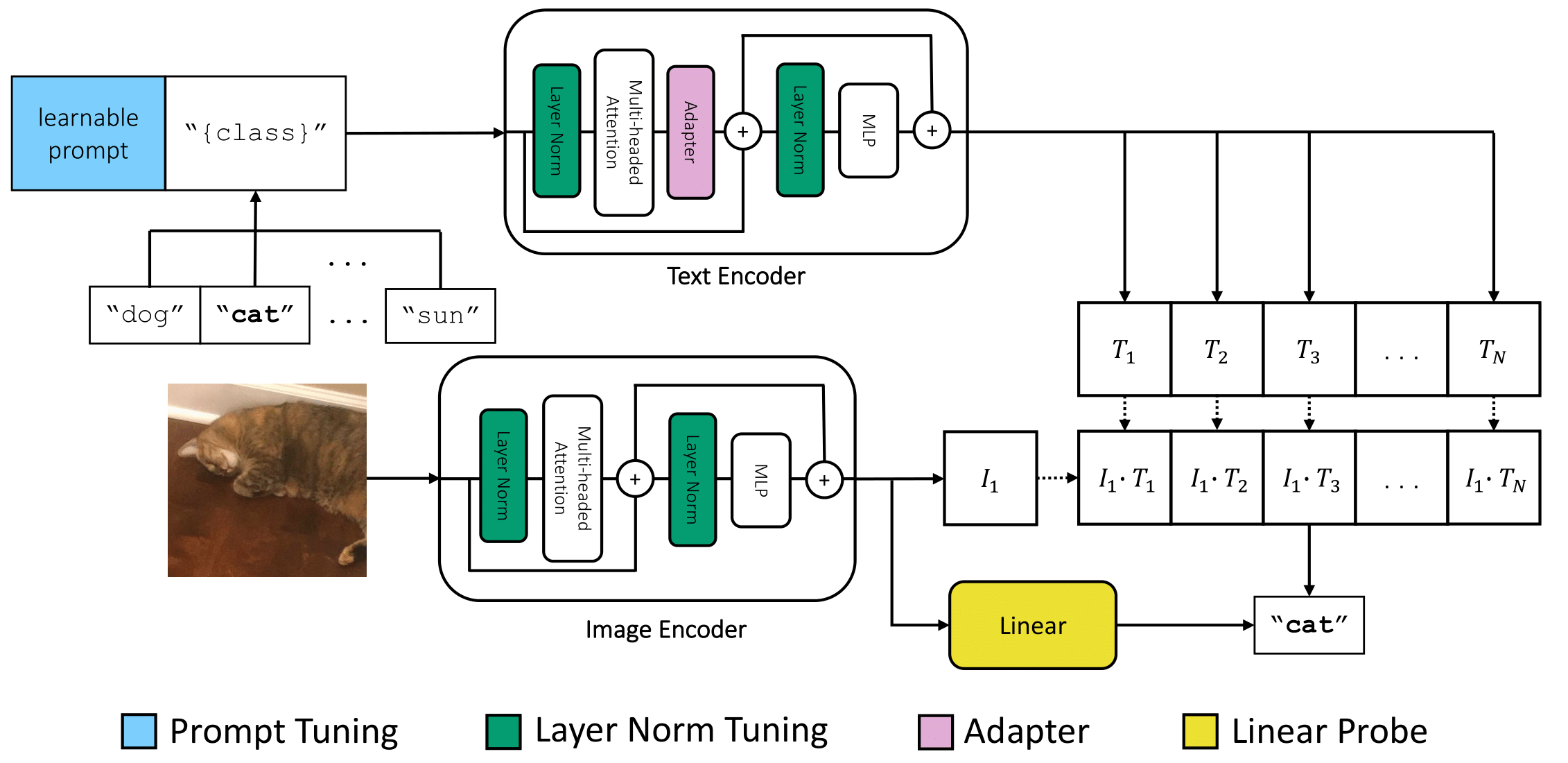

Pre-training large-scale vision and language models (e.g., CLIP) has shown promising results in representation and transfer learning. This research project (advised by Prof. Deepak Pathak, Dr. Igor Mordatch, and Dr. Michael Laskin) investigates how to efficiently adapt these models to out-of-distribution downstream tasks. We analyze several fine-tuning methods on a diverse set of image classification tasks that vary along two axes: the amount of downstream data and its similarity to the pretraining data.

Our primary contribution is showing that tuning only the LayerNorm parameters is a surprisingly effective baseline across the board. We further demonstrate a simple strategy for combining LayerNorm-tuning with general fine-tuning methods to improve their performance, and we benchmark them on few-shot adaptation and distribution shift tasks. Finally, we provide an empirical analysis and recommend general recipes for efficient transfer learning of CLIP-like models.
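The toy NumPy sketch below shows what "tuning only LayerNorm parameters" means mechanically. A frozen random encoder and head stand in for pretrained CLIP weights, and gradient descent updates only the LayerNorm gain `gamma` and bias `beta`. The shapes, data, learning rate, and helper names are all hypothetical. In a real framework, the equivalent setup would freeze every parameter and re-enable gradients only for the LayerNorm modules (in PyTorch, via each parameter's `requires_grad` flag).

```python
import numpy as np

rng = np.random.default_rng(0)
N, D_IN, D, D_OUT = 256, 8, 16, 4

# Frozen "pretrained" weights: random stand-ins for a CLIP encoder and head.
W_enc = rng.normal(size=(D_IN, D))
W_head = rng.normal(size=(D, D_OUT)) / np.sqrt(D)


def normalized_features(x, eps=1e-5):
    """Frozen encoder followed by LayerNorm's normalization (no affine part)."""
    h = x @ W_enc
    return (h - h.mean(-1, keepdims=True)) / np.sqrt(h.var(-1, keepdims=True) + eps)


def predict(x, gamma, beta):
    # gamma and beta (LayerNorm's affine parameters) are the only tuned weights.
    return (gamma * normalized_features(x) + beta) @ W_head


def tune_layernorm(x, t, steps=300, lr=0.05):
    gamma, beta = np.ones(D), np.zeros(D)  # start from default LayerNorm values
    xhat = normalized_features(x)          # constant: nothing upstream is trained
    losses = []
    for _ in range(steps):
        err = predict(x, gamma, beta) - t
        losses.append(float(np.mean(err ** 2)))
        g_out = 2 * err / err.size          # dL/d(output) for mean squared error
        g_y = g_out @ W_head.T              # backprop through the frozen head only
        gamma -= lr * (g_y * xhat).sum(axis=0)
        beta -= lr * g_y.sum(axis=0)
    return gamma, beta, losses


# Toy downstream task: targets produced by a shifted LayerNorm affine map.
x = rng.normal(size=(N, D_IN))
t = predict(x, 1.0 + 0.5 * rng.normal(size=D), 0.5 * rng.normal(size=D))
gamma, beta, losses = tune_layernorm(x, t)
```

Each LayerNorm contributes only `2 * D` trainable numbers (32 here), which is why this baseline is so cheap compared with full fine-tuning.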

Invert and Factor May 2021

Unsupervised, interpretable image editing.

This is an image editing web interface combining GAN inversion with semantic factorization. Users can upload an image of a face to the interface and modify it by manipulating sliders which correspond to semantic attributes.

Semantic factorization finds semantically meaningful directions in the latent space of a generative model quickly and without supervision. Combining this with a pretrained inversion model and a StyleGAN2 generator allows an uploaded image to be inverted, factorized, manipulated, and re-generated in real time.
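Closed-form factorization in the style of SeFa (Shen and Zhou) is one well-known way to get such directions. This sketch assumes the project uses something like it. The top eigenvectors of `A^T A` are taken, where `A` is the weight of the generator's first affine layer acting on the latent code. Here `A` is random rather than read from a real StyleGAN2 checkpoint. The latent `w` is also random, standing in for the output of the inversion model. The dimensions and the function names `semantic_directions` and `edit` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM, STYLE_DIM = 512, 512

# Stand-in for the weight A of an affine layer y = A @ w + b applied to the
# latent code w. Random here; in practice it would come from the pretrained generator.
A = rng.normal(size=(STYLE_DIM, LATENT_DIM)) / np.sqrt(LATENT_DIM)


def semantic_directions(A, k=5):
    """Top-k eigenvectors of A^T A: unit directions n that maximize ||A n||^2.

    No labels or training are required, only the layer's weights.
    """
    eigvals, eigvecs = np.linalg.eigh(A.T @ A)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:k]
    return eigvecs[:, order].T                  # (k, LATENT_DIM), orthonormal rows


def edit(w, directions, sliders):
    """Move a latent code along each direction by its slider value."""
    return w + np.asarray(sliders) @ directions


dirs = semantic_directions(A)
w = rng.normal(size=LATENT_DIM)  # would come from the inversion model
w_edited = edit(w, dirs, [3.0, 0.0, -1.5, 0.0, 0.0])
```

Each slider in the interface would correspond to one row of `dirs`. The edited code is then passed back through the generator to re-render the image.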

Links: Website